Inside Setu’s Bill Payments MCP: Engineering for Agentic Commerce

16 Dec 2025

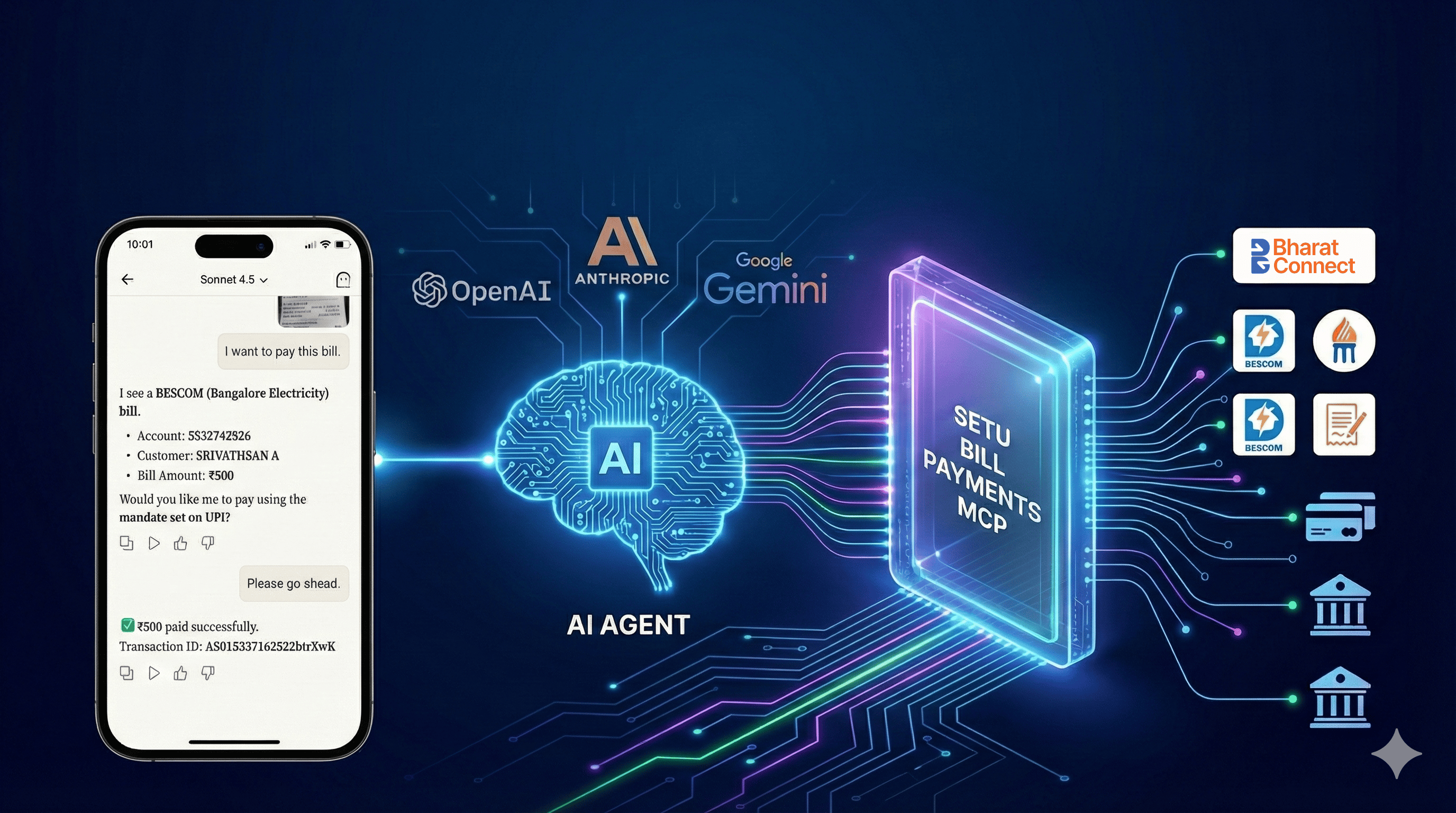

AI agents are increasingly being used to handle complex, repetitive, and multi-step workflows in our daily lives. Large Language Model (LLM) tools like OpenAI’s ChatGPT, Anthropic’s Claude, and Google’s Gemini now offer conversational interfaces that can not only retrieve information, but also take actions on behalf of users.

This shift naturally leads to Agentic Payments, where authorised AI agents execute payments, and Agentic Commerce, which further personalises and streamlines purchase decisions. With these developments in mind, we at Setu experimented with building Agentic Bill Payments.

What are agentic bill payments?

Agentic Bill Payments is a next-generation bill payments platform built on an MCP (Model Context Protocol) server. It enables secure, AI-assisted payment execution through conversational interfaces such as chat or voice.

Bill payments can now be interpreted, reasoned about, and executed using natural language. The system understands user intent, resolves ambiguity, dynamically stitches multi-step workflows, and adapts flows in real time—capabilities that are difficult to pre-design in deterministic, screen-based applications.

Through this initiative, Setu is laying the foundation for the next generation of AI-enabled bill payments across the ecosystem.

When we first explored chat-based bill payments, we realised that the fastest way to test our hypotheses was to build an MCP server. MCP servers plug into popular LLM chat applications like ChatGPT and Claude and act as a standardised layer between LLM agents and core applications, so integrations can scale without a custom adapter for every agent.

Building on Setu’s core bill payments stack

Our bill payments application was already production-ready. What we needed was a Bill Payments MCP on top of it—one that could translate existing functionality into a language-driven interface.

With this foundation in place, our engineering team began building the MCP server on top of our core BillPay application. This effort was supported by Setu’s strong product and engineering culture, where teams consistently experiment with emerging technologies.

That groundwork allowed us to extend MCP capabilities to one of our flagship offerings: Setu’s Bharat Connect Bill Payments.

We first invested time in deeply understanding the MCP specification—its design, constraints, and how it would integrate with our existing stack. Implementation began only after these fundamentals were clear.

While one team focused on MCP development, another prepared the core application to support an external front-end that we do not control. This included user management, authentication flows, and ensuring consistent behaviour across LLM-based interfaces and existing channels.

After several brainstorming sessions, the teams got to work. This was followed by the usual late-night resolution calls that come with first-of-its-kind launches. Our goal was simple: ensure the MCP could support everything our core application already supports.

Designing MCP tools for bill payments

To achieve this, we built MCP tools that closely mirrored the APIs of our BillPay product. These tools define how an LLM agent can interact with the system.

For example, we created tools to:

- Fetch billers available on Bharat Connect

- Retrieve bill amounts using customer details

- Initiate bill payments

- Check payment status

Each tool is defined in the format the MCP specification prescribes, and its definition covers:

- Its purpose

- When it should be triggered

- The underlying API

- The expected input schema

These definitions allow LLM agents to understand user intent and reliably invoke the correct tool.
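The one-tool-per-API mapping described above can be sketched as a small registry plus a dispatcher. This is a minimal illustration, not Setu's implementation: the tool names, handler signatures, and return values below are all hypothetical stand-ins for the real BillPay APIs.

```python
from typing import Any, Callable


# Stand-ins for the BillPay APIs each tool would wrap. Names and return
# shapes are hypothetical and only illustrate the one-tool-per-API mapping.
def fetch_billers(category: str) -> list[str]:
    return [f"sample-{category}-biller"]


def fetch_bill(biller_id: str, customer_id: str) -> dict[str, Any]:
    return {"biller_id": biller_id, "customer_id": customer_id, "amount_due": 0}


def initiate_payment(bill_id: str) -> dict[str, Any]:
    return {"bill_id": bill_id, "status": "INITIATED"}


def check_payment_status(payment_id: str) -> dict[str, Any]:
    return {"payment_id": payment_id, "status": "PENDING"}


# Registry: tool name -> (description shown to the agent, handler to call).
TOOLS: dict[str, tuple[str, Callable[..., Any]]] = {
    "fetch_billers": ("List billers available on Bharat Connect.", fetch_billers),
    "fetch_bill": ("Retrieve the bill amount for a customer.", fetch_bill),
    "initiate_payment": ("Start payment for a fetched bill.", initiate_payment),
    "check_payment_status": ("Report the status of a payment.", check_payment_status),
}


def call_tool(name: str, arguments: dict[str, Any]) -> Any:
    """Route an agent's tool call to the matching handler."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    _description, handler = TOOLS[name]
    return handler(**arguments)
```

The agent only ever sees the tool names and descriptions; the handlers stay behind the server, so the underlying APIs can change without the agent needing to know.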

Example: a sample MCP tool definition

Below is an example of how a tool definition is structured:

    {
      "name": "searchFlights",
      "description": "Search for available flights",
      "inputSchema": {
        "type": "object",
        "properties": {
          "origin": {
            "type": "string",
            "description": "Departure city"
          },
          "destination": {
            "type": "string",
            "description": "Arrival city"
          },
          "date": {
            "type": "string",
            "format": "date",
            "description": "Travel date"
          }
        },
        "required": ["origin", "destination", "date"]
      }
    }
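To show what the input schema buys you, here is a rough sketch of how a server could check an agent's arguments against the `inputSchema` above before calling the underlying API. The `validate_arguments` helper is hypothetical and covers only the parts of JSON Schema this example uses (required fields, string types, and the `date` format); a real server would more likely rely on a full JSON Schema validator.

```python
from datetime import date
from typing import Any

# The inputSchema from the sample tool definition above.
SEARCH_FLIGHTS_SCHEMA: dict[str, Any] = {
    "type": "object",
    "properties": {
        "origin": {"type": "string", "description": "Departure city"},
        "destination": {"type": "string", "description": "Arrival city"},
        "date": {"type": "string", "format": "date", "description": "Travel date"},
    },
    "required": ["origin", "destination", "date"],
}


def validate_arguments(schema: dict[str, Any], arguments: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the arguments pass."""
    errors: list[str] = []
    properties = schema.get("properties", {})
    # Every field named in "required" must be present.
    for field in schema.get("required", []):
        if field not in arguments:
            errors.append(f"missing required field: {field}")
    for field, value in arguments.items():
        spec = properties.get(field)
        if spec is None:
            continue  # fields the schema does not describe are ignored here
        if spec.get("type") == "string" and not isinstance(value, str):
            errors.append(f"{field} must be a string")
        elif spec.get("format") == "date":
            try:
                date.fromisoformat(value)
            except (TypeError, ValueError):
                errors.append(f"{field} must be an ISO date (YYYY-MM-DD)")
    return errors
```

Returning a list of readable problems, rather than raising on the first one, lets the server hand the whole list back to the agent so it can correct its call in a single retry.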

Early validation and cross-LLM testing

Within a few days—and after a successful first demo—we knew we were moving in the right direction. We then explored how the MCP behaved across different LLM clients.

A key focus was reducing friction between a user discovering the Setu Bill Payments MCP and completing their first transaction:

- We moved away from OTP entry inside the chat

- Users are redirected to a Setu interface for authentication

- We evaluated which workflows could remain probabilistic and which needed to be deterministic

Balancing probabilistic and deterministic workflows

Some workflows benefit from probabilistic reasoning. For example, when a user asks to pay an electricity bill for their home in Bangalore, the LLM can infer the correct biller, identify required parameters, and dynamically guide bill fetching.

Other workflows are inherently deterministic, and status checks are one such case. A single bill payment spans multiple system legs:

- The payment is first executed via UPI

- Only after the UPI payment succeeds is a BBPS (Bharat Bill Payment System, now branded Bharat Connect) transaction created

Instead of exposing separate tools for UPI and BBPS status checks, we combined this logic into a single deterministic flow. The MCP first checks the UPI status and then conditionally fetches the BBPS status.
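A minimal sketch of that combined check is shown below. The function name, the status strings, and the assumption that both legs can be looked up by the same payment ID are hypothetical; the two lookups are passed in as callables so the control flow stands on its own.

```python
from typing import Callable, Optional


def combined_payment_status(
    payment_id: str,
    get_upi_status: Callable[[str], str],
    get_bbps_status: Callable[[str], str],
) -> dict[str, Optional[str]]:
    """Check the UPI leg first; query BBPS only if UPI succeeded."""
    upi_status = get_upi_status(payment_id)
    if upi_status != "SUCCESS":
        # No BBPS transaction exists yet, so there is nothing to look up.
        return {"upi": upi_status, "bbps": None}
    return {"upi": upi_status, "bbps": get_bbps_status(payment_id)}
```

Because the ordering lives in code rather than in the prompt, the agent cannot query the BBPS leg before the UPI leg has succeeded.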

We stress-tested the agent with a wide range of prompts, including:

- “What did I spend last month?”

- “Why is my electricity bill higher this summer?”

- “Show me a chart of my FASTag expenses over the past six months.”

The agent responded by pulling transaction history, analysing spending patterns, generating visual breakdowns, and offering contextual insights. It flagged increased electricity usage during Bangalore’s summer months and identified FASTag recharge patterns aligned with weekend travel.

Emergent workflows and agentic reasoning

Some of the most interesting behaviours came from unplanned workflows. When asked, “Do I have any bills due?”, the agent:

- Fetches all saved billers

- Pulls fresh bills

- Checks payment status

- Presents a clean summary of pending and paid bills

These workflows were never explicitly programmed. They emerged from the agent’s ability to intelligently chain tools together. We tested hundreds of queries across ChatGPT and Claude, refining prompts, probing edge cases, and tuning tool responses.
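For comparison, here is roughly what the "bills due" chain would look like if it were written by hand as fixed code. The agent produced the equivalent sequence on its own. The function and the three callables are hypothetical stand-ins for the corresponding tools, and the `bill_id` key is an assumed field.

```python
from typing import Any, Callable


def summarise_due_bills(
    list_saved_billers: Callable[[], list[str]],
    fetch_bill: Callable[[str], dict[str, Any]],
    is_paid: Callable[[str], bool],
) -> dict[str, list[str]]:
    """Fetch saved billers, pull each bill, check its status, and group the results."""
    summary: dict[str, list[str]] = {"pending": [], "paid": []}
    for biller_id in list_saved_billers():
        bill = fetch_bill(biller_id)
        bucket = "paid" if is_paid(bill["bill_id"]) else "pending"
        summary[bucket].append(biller_id)
    return summary
```

Hard-coding the chain like this would fix one question and one answer shape; leaving the chaining to the agent is what allowed the same tools to serve questions nobody planned for.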

What’s next: building on a strong foundation

Through this process, we realised we had only scratched the surface. Many more use cases can be unlocked by enriching the Setu Bill Payments MCP and adding further technical sophistication.

As MCP standards and LLM agents evolve, we can move faster—confident that the foundation is already in place. The payments ecosystem in India is ready. The infrastructure exists. The rails are mature. And users are open to new experiences.

This is just the beginning.

If you want to see what agentic bill payments can look like in practice, sign up for early access.